April 8, 2024

NVIDIA A100 Enterprise PCIe 40GB/80GB

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration—at every scale—to power the world’s highest performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. 3-year manufacturer warranty included.

| Specification | Value |
|---|---|
| CUDA Cores | 6912 |
| Streaming Multiprocessors | 108 |
| Tensor Cores | 432 (Gen 3) |
| GPU Memory | 40 GB or 80 GB HBM2e, ECC on by default |
| Memory Interface | 5120-bit |
| Memory Bandwidth | 1555 GB/s |
| NVLink | 2-Way, 2-Slot, 600 GB/s Bidirectional |
| MIG (Multi-Instance GPU) Support | Yes, up to 7 GPU Instances |
| FP64 | 9.7 TFLOPS |
| FP64 Tensor Core | 19.5 TFLOPS |
| FP32 | 19.5 TFLOPS |
| TF32 Tensor Core | 156 TFLOPS / 312 TFLOPS* |
| BFLOAT16 Tensor Core | 312 TFLOPS / 624 TFLOPS* |
| FP16 Tensor Core | 312 TFLOPS / 624 TFLOPS* |
| INT8 Tensor Core | 624 TOPS / 1248 TOPS* |
| Thermal Solutions | Passive |
| vGPU Support | NVIDIA Virtual Compute Server (vCS) |
| System Interface | PCIe 4.0 x16 |

\* With sparsity.
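Several of the figures in the specification table can be cross-checked against each other with simple arithmetic. The sketch below is illustrative only: the constants are copied from the table, while the assumption that each CUDA core performs one fused multiply-add (2 floating-point operations) per clock is not stated in the listing, and the function names are made up for this example.

```python
# Values copied from the specification table.
CUDA_CORES = 6912
STREAMING_MULTIPROCESSORS = 108
TENSOR_CORES = 432
FP32_TFLOPS = 19.5
MEMORY_INTERFACE_BITS = 5120
MEMORY_BANDWIDTH_GBS = 1555

# Assumption (not in the listing): one fused multiply-add per core per clock,
# counted as 2 floating-point operations.
FLOPS_PER_CORE_PER_CLOCK = 2


def units_per_sm(total_units: int, sm_count: int) -> int:
    """Number of cores of a given type on each streaming multiprocessor."""
    return total_units // sm_count


def implied_clock_ghz(tflops: float, cores: int) -> float:
    """Clock rate implied by a peak TFLOPS figure under the FMA assumption."""
    return tflops * 1e12 / (cores * FLOPS_PER_CORE_PER_CLOCK) / 1e9


def implied_pin_rate_gbps(bandwidth_gbs: float, bus_bits: int) -> float:
    """Per-pin data rate: convert GB/s to Gbit/s, then divide by bus width."""
    return bandwidth_gbs * 8 / bus_bits


if __name__ == "__main__":
    print("CUDA cores per SM:  ", units_per_sm(CUDA_CORES, STREAMING_MULTIPROCESSORS))
    print("Tensor Cores per SM:", units_per_sm(TENSOR_CORES, STREAMING_MULTIPROCESSORS))
    print("Implied clock (GHz):", round(implied_clock_ghz(FP32_TFLOPS, CUDA_CORES), 2))
    print("Pin rate (Gbit/s):  ", round(implied_pin_rate_gbps(MEMORY_BANDWIDTH_GBS, MEMORY_INTERFACE_BITS), 2))
```

Under these assumptions the table is internally consistent: 64 CUDA cores and 4 Tensor Cores per SM, and a peak FP32 rate that corresponds to a clock of roughly 1.41 GHz.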

Ships in 10 days from payment. All sales final. No cancellations or returns.

$7,600.00 – $13,200.00

Accelerating the Most Important Work of Our Time

NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale to power the world’s highest-performing elastic data centers for AI, data analytics, and HPC. Powered by the NVIDIA Ampere Architecture, A100 is the engine of the NVIDIA data center platform. A100 provides up to 20X higher performance over the prior generation and can be partitioned into seven GPU instances to dynamically adjust to shifting demands. The A100 80GB debuts the world’s fastest memory bandwidth at over 2 terabytes per second (TB/s) to run the largest models and datasets.

Deep Learning Training

Up to 3X Higher AI Training on Largest Models

DLRM Training

DLRM on HugeCTR framework, precision = FP16 | NVIDIA A100 80GB batch size = 48 | NVIDIA A100 40GB batch size = 32 | NVIDIA V100 32GB batch size = 32.

AI models are exploding in complexity as they take on next-level challenges such as conversational AI. Training them requires massive compute power and scalability.

NVIDIA A100 Tensor Cores with Tensor Float (TF32) provide up to 20X higher performance over NVIDIA Volta with zero code changes, plus an additional 2X boost with automatic mixed precision and FP16. When combined with NVIDIA® NVLink®, NVIDIA NVSwitch™, PCIe Gen4, NVIDIA® InfiniBand®, and the NVIDIA Magnum IO™ SDK, it’s possible to scale to thousands of A100 GPUs.
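To give a feel for what TF32 trades away, the sketch below emulates its reduced precision in plain Python. TF32 keeps the 8-bit exponent of FP32 but only 10 mantissa bits, so the example clears the 13 lowest mantissa bits of a 32-bit float. This is a simplification: it truncates rather than reproducing the hardware's rounding behavior, and the function name `to_tf32` is made up for this illustration.

```python
import struct

FP32_MANTISSA_BITS = 23
TF32_MANTISSA_BITS = 10  # TF32 layout: 1 sign bit, 8 exponent bits, 10 mantissa bits


def to_tf32(value: float) -> float:
    """Truncate a number to TF32 precision (simplified: truncation, not hardware rounding)."""
    # Reinterpret the value as the 32 raw bits of an IEEE 754 single.
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    # Clear the mantissa bits that TF32 does not store.
    dropped = FP32_MANTISSA_BITS - TF32_MANTISSA_BITS
    bits &= ~((1 << dropped) - 1) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for x in (1.0, 1.0 + 2 ** -10, 1.0 + 2 ** -11, 3.14159265):
        print(f"{x!r:>22} -> {to_tf32(x)!r}")
```

The smallest step above 1.0 that survives is 2^-10, which is the same mantissa resolution as FP16, while the exponent range stays that of FP32.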

A training workload like BERT can be solved at scale in under a minute by 2,048 A100 GPUs, a world record for time to solution.

For the largest models with massive data tables like deep learning recommendation models (DLRM), A100 80GB reaches up to 1.3 TB of unified memory per node and delivers up to a 3X throughput increase over A100 40GB.

NVIDIA has demonstrated its leadership in MLPerf, setting multiple performance records in the industry-wide benchmark for AI training.

Deep Learning Inference

A100 introduces groundbreaking features to optimize inference workloads. It accelerates a full range of precisions, from FP32 to INT4. Multi-Instance GPU (MIG) technology lets multiple networks operate simultaneously on a single A100 for optimal utilization of compute resources. Structural sparsity support delivers up to 2X more performance on top of A100’s other inference performance gains.
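The listing does not say which sparsity pattern is meant. The sketch below assumes the 2:4 fine-grained pattern NVIDIA describes for the Ampere architecture, in which two of every four consecutive weights are zero. It is a plain-Python illustration of magnitude-based pruning to that pattern, not NVIDIA's tooling, and `prune_2_of_4` is a hypothetical name.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in every group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Positions of the two largest magnitudes within this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


if __name__ == "__main__":
    dense = [0.1, -0.9, 0.5, 0.05, 1.0, 2.0, -3.0, 0.0]
    print(prune_2_of_4(dense))
```

Because half of the values in each group are known to be zero, the hardware can skip them, which is where the "up to 2X" figure comes from; the actual gain depends on the model and how well it tolerates pruning.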

On state-of-the-art conversational AI models like BERT, A100 accelerates inference throughput up to 249X over CPUs.

On the most complex models that are batch-size constrained like RNN-T for automatic speech recognition, A100 80GB’s increased memory capacity doubles the size of each MIG and delivers up to 1.25X higher throughput over A100 40GB.

NVIDIA’s market-leading performance was demonstrated in MLPerf Inference. A100 brings 20X more performance to further extend that leadership.

Up to 249X Higher AI Inference Performance

Over CPUs

BERT-LARGE Inference

BERT-Large Inference | CPU only: Xeon Gold 6240 @ 2.60 GHz, precision = FP32, batch size = 128 | V100: NVIDIA TensorRT™ (TRT) 7.2, precision = INT8, batch size = 256 | A100 40GB and 80GB, batch size = 256, precision = INT8 with sparsity.

Up to 1.25X Higher AI Inference Performance

Over A100 40GB

RNN-T Inference: Single Stream

MLPerf 0.7 RNN-T measured with (1/7) MIG slices. Framework: TensorRT 7.2, dataset = LibriSpeech, precision = FP16.

High-Performance Computing

To unlock next-generation discoveries, scientists look to simulations to better understand the world around us.

NVIDIA A100 introduces double precision Tensor Cores to deliver the biggest leap in HPC performance since the introduction of GPUs. Combined with 80GB of the fastest GPU memory, researchers can reduce a 10-hour, double-precision simulation to under four hours on A100. HPC applications can also leverage TF32 to achieve up to 11X higher throughput for single-precision, dense matrix-multiply operations.

For the HPC applications with the largest datasets, A100 80GB’s additional memory delivers up to a 2X throughput increase with Quantum Espresso, a materials simulation. This massive memory and unprecedented memory bandwidth make the A100 80GB the ideal platform for next-generation workloads.

11X More HPC Performance in Four Years

Top HPC Apps

Geometric mean of application speedups vs. P100 | Benchmark applications: Amber [PME-Cellulose_NVE], Chroma [szscl21_24_128], GROMACS [ADH Dodec], MILC [Apex Medium], NAMD [stmv_nve_cuda], PyTorch [BERT-Large Fine Tuner], Quantum Espresso [AUSURF112-jR], Random Forest FP32 [make_blobs (160000 x 64 : 10)], TensorFlow [ResNet-50], VASP 6 [Si Huge] | GPU node with dual-socket CPUs with 4x NVIDIA P100, V100, or A100 GPUs.
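The headline figure for this chart is a geometric mean of per-application speedups. A minimal sketch of that calculation follows; the speedup values in the usage example are hypothetical placeholders, not measurements from the benchmark.

```python
import math


def geometric_mean(speedups):
    """Geometric mean of positive ratios, computed via logs for numerical stability."""
    if not speedups:
        raise ValueError("need at least one speedup")
    if any(s <= 0 for s in speedups):
        raise ValueError("speedups must be positive")
    return math.exp(sum(math.log(s) for s in speedups) / len(speedups))


if __name__ == "__main__":
    # Hypothetical per-application speedups, for illustration only.
    example = [2.0, 8.0]
    print(geometric_mean(example))
```

A geometric mean is the usual choice for averaging ratios because one outlier application cannot dominate the result the way it would in an arithmetic mean.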

Up to 1.8X Higher Performance for HPC Applications

Quantum Espresso

Quantum Espresso measured using CNT10POR8 dataset, precision = FP64.

High-Performance Data Analytics

2X Faster than A100 40GB on Big Data Analytics Benchmark

Big data analytics benchmark | 30 analytical retail queries, ETL, ML, NLP on 10TB dataset | V100 32GB, RAPIDS/Dask | A100 40GB and A100 80GB, RAPIDS/Dask/BlazingSQL

Data scientists need to be able to analyze, visualize, and turn massive datasets into insights. But scale-out solutions are often bogged down by datasets scattered across multiple servers.

Accelerated servers with A100 provide the needed compute power, along with massive memory, over 2 TB/sec of memory bandwidth, and scalability with NVIDIA® NVLink® and NVSwitch™, to tackle these workloads. Combined with InfiniBand, NVIDIA Magnum IO™, and the RAPIDS™ suite of open-source libraries, including the RAPIDS Accelerator for Apache Spark for GPU-accelerated data analytics, the NVIDIA data center platform accelerates these huge workloads at unprecedented levels of performance and efficiency.

On a big data analytics benchmark, A100 80GB delivered insights 2X faster than A100 40GB, making it ideally suited for emerging workloads with exploding dataset sizes.

Enterprise-Ready Utilization

7X Higher Inference Throughput with Multi-Instance GPU (MIG)

BERT Large Inference

BERT Large Inference | NVIDIA TensorRT™ (TRT) 7.1 | NVIDIA T4 Tensor Core GPU: TRT 7.1, precision = INT8, batch size = 256 | V100: TRT 7.1, precision = FP16, batch size = 256 | A100 with 1 or 7 MIG instances of 1g.5gb: batch size = 94, precision = INT8 with sparsity.

A100 with MIG maximizes the utilization of GPU-accelerated infrastructure. With MIG, an A100 GPU can be partitioned into as many as seven independent instances, giving multiple users access to GPU acceleration. With A100 40GB, each MIG instance can be allocated up to 5GB, and with A100 80GB’s increased memory capacity, that size is doubled to 10GB.
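The partitioning figures above can be turned into a quick sizing check. The sketch below uses only the numbers from the paragraph (up to seven instances, 5GB each on A100 40GB and 10GB each on A100 80GB) and assumes, for simplicity, that every job runs in one of these smallest instances. The function and variable names are hypothetical, and this is not a MIG management API.

```python
MAX_INSTANCES = 7
INSTANCE_MEMORY_GB = {"A100 40GB": 5, "A100 80GB": 10}


def assign_jobs(model, job_memory_gb):
    """Split jobs into those that fit a seven-way partition and those that do not.

    A job is placed if it fits in one instance's memory and an instance is
    still free; otherwise it is rejected.
    """
    instance_gb = INSTANCE_MEMORY_GB[model]
    placed, rejected = [], []
    for need in job_memory_gb:
        if need <= instance_gb and len(placed) < MAX_INSTANCES:
            placed.append(need)
        else:
            rejected.append(need)
    return placed, rejected


if __name__ == "__main__":
    jobs = [4, 8, 3]  # hypothetical per-job memory needs in GB
    for model in INSTANCE_MEMORY_GB:
        print(model, assign_jobs(model, jobs))
```

In this example the 8GB job is rejected on the 40GB card but fits on the 80GB card, which is the practical effect of the doubled instance size.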

MIG works with Kubernetes, containers, and hypervisor-based server virtualization. MIG lets infrastructure managers offer a right-sized GPU with guaranteed quality of service (QoS) for every job, extending the reach of accelerated computing resources to every user.

| Attribute | Options |
|---|---|
| Memory | 40GB, 80GB |

Related products

-

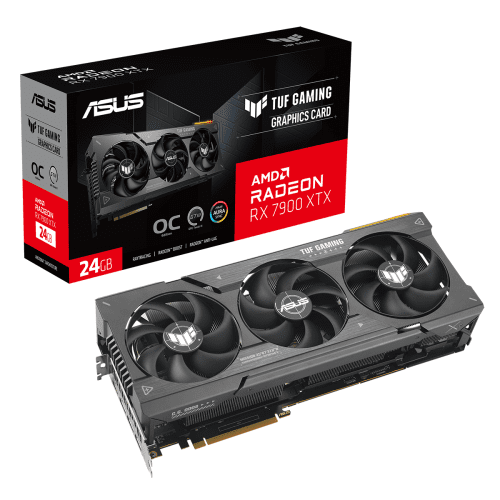

ASUS TUF Gaming Radeon RX 7900 XTX OC Edition 24GB GDDR6

- Axial-tech fans scaled up to deliver 14% more airflow

- Dual ball fan bearings last up to twice as long as conventional designs

- Military-grade capacitors rated for 20K hours at 105C make the GPU power rail more durable

- Metal Exoskeleton adds structural rigidity and vents to increase heat dissipation

- Auto-Extreme precision automated manufacturing for higher reliability

- GPU Tweak III software provides intuitive performance tweaking, thermal controls, and system monitoring

Original price: $1,199.00. Current price: $995.00.

GIGABYTE GeForce RTX 4070 Ti WINDFORCE OC 12G

$1,049.00

A Powerful Offering for Gaming Enthusiasts

GIGABYTE, a renowned brand in the world of gaming hardware, has introduced their latest graphics card, the GV-N407TWF3OC-12GD from their WINDFORCE series. Designed to meet the demands of avid gamers, this powerhouse is equipped with top-notch features that deliver an exceptional gaming experience. Powered by the NVIDIA GeForce RTX 4070 Ti GPU, the card boasts a core clock speed of 2625 MHz and an impressive 7680 CUDA cores, ensuring smooth and immersive gameplay. With 12GB of GDDR6X memory and a 192-bit memory interface, it offers high-speed and efficient performance, enabling gamers to tackle the most demanding titles with ease.

GIGABYTE WINDFORCE GV-N407TWF3OC-12GD Delivers Cutting-Edge Gaming Performance

Combining style and performance, the GIGABYTE WINDFORCE GV-N407TWF3OC-12GD graphics card offers a winning combination for gamers seeking a competitive edge. The ATX form factor card features a sleek design and compact dimensions, with a maximum GPU length of 261mm and a slot width of 2.5 slots, making it compatible with a wide range of systems. It supports up to 4 monitors through 1 HDMI port and 3 DisplayPort ports, providing flexibility for multi-display setups and allowing users to immerse themselves in the action on multiple screens. With a thermal design power of 201W, the card relies on a triple fan cooling system for heat dissipation, keeping temperatures in check even during intense gaming sessions.

ASUS TUF Gaming GeForce RTX 4070 Ti 12GB

$1,157.00

ASUS TUF Gaming GeForce RTX™ 4070 Ti 12GB GDDR6X with DLSS 3, lower temps, and enhanced durability

- Powered by NVIDIA DLSS 3, ultra-efficient Ada Lovelace architecture, and full ray tracing

- 4th Generation Tensor Cores: Up to 4X performance with DLSS 3 vs. brute-force rendering

- 3rd Generation RT Cores: Up to 2X ray tracing performance

- Axial-tech fans scaled up for 21% more airflow

- Dual ball fan bearings last up to twice as long as conventional designs

- Military-grade capacitors rated for 20K hours at 105C make the GPU power rail more durable

- Metal exoskeleton adds structural rigidity and vents to increase heat reliability

- Auto-Extreme precision automated manufacturing for higher reliability

- GPU Tweak III software provides intuitive performance tweaking, thermal controls, and system monitoring
